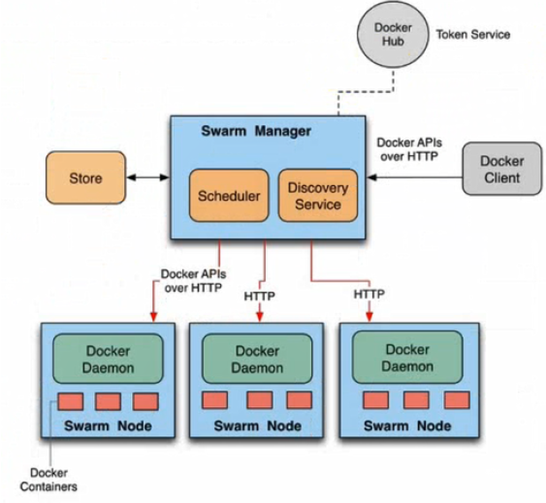

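Introduction

The following environment is required:

- A Kubernetes cluster

- The Blackbox tool

- Grafana and Prometheus monitoring

Overview: deploy the Blackbox tool (which monitors services and checks network availability) together with Grafana and Prometheus (monitoring and visualization dashboards) in a K8s cluster, so that network connectivity is shown more intuitively and can be used for alerting and analysis.

This article combines two posts from the 若海 blog: 【Kubernetes 集群上安装 Blackbox 监控网站状态】 (installing Blackbox on a Kubernetes cluster to monitor website status) and 【Kubernetes 集群上安装 Grafana 和 Prometheus】 (installing Grafana and Prometheus on a Kubernetes cluster).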

Deploying the Kubernetes cluster (Ubuntu/Debian)

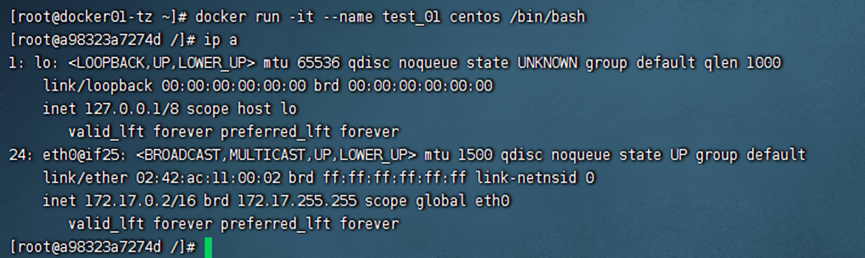

Make sure the master node and the worker nodes all have Docker installed (ideally the same version).
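One way to check that the versions agree is to collect the version string from each node and compare them. The sketch below assumes you gather the strings yourself; the helper name `docker_versions_match` is hypothetical and not part of Docker.

```shell
# Print "match" if every version string passed as an argument is identical,
# otherwise print "mismatch" and return a non-zero status.
docker_versions_match() {
  first="$1"
  for v in "$@"; do
    if [ "$v" != "$first" ]; then
      echo "mismatch"
      return 1
    fi
  done
  echo "match"
}

# On each node, the server version can be read with:
#   docker version --format '{{.Server.Version}}'
# and the collected strings compared, for example:
#   docker_versions_match "$MASTER_VERSION" "$WORKER_VERSION"
```

`MASTER_VERSION` and `WORKER_VERSION` are placeholder variables for the values you collected.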

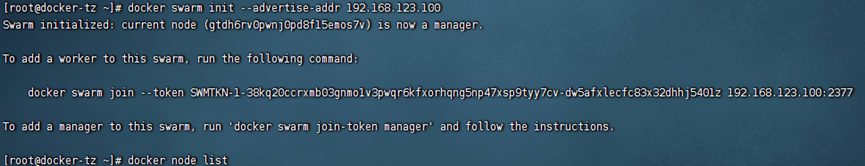

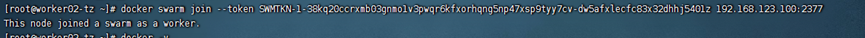

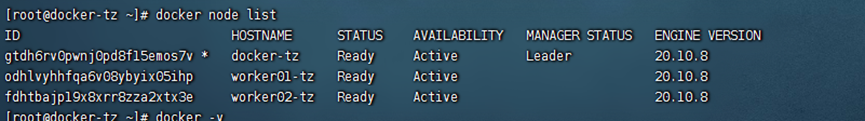

Master node

# Install Docker with the one-line script (skip if Docker is already installed)
curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun

# Start Docker and enable it at boot
systemctl start docker && systemctl enable docker

apt update
apt install -y wireguard

# Enable IPv4 forwarding
echo "net.ipv4.ip_forward = 1" >/etc/sysctl.d/ip_forward.conf
sysctl -p /etc/sysctl.d/ip_forward.conf

# Save this token. It can be any string; the worker nodes use the same value
export SERVER_TOKEN=r83nui54eg8wihyiteshuo3o43gbf7u9er63o43gbf7uitujg8wihyitr6

# Public and private IPs, read from the Tencent Cloud metadata service
export PUBLIC_IP=$(curl -Ls http://metadata.tencentyun.com/latest/meta-data/public-ipv4)
export PRIVATE_IP=$(curl -Ls http://metadata.tencentyun.com/latest/meta-data/local-ipv4)

# Download the k3s binary manually and let the install script skip its own download
export INSTALL_K3S_SKIP_DOWNLOAD=true
export DOWNLOAD_K3S_BIN_URL=https://github.com/k3s-io/k3s/releases/download/v1.28.2%2Bk3s1/k3s

# Go through the ghproxy.com mirror when the node reports country code CN
if [ "$(curl -Ls http://ipip.rehi.org/country_code)" = "CN" ]; then
  DOWNLOAD_K3S_BIN_URL=https://ghproxy.com/${DOWNLOAD_K3S_BIN_URL}
fi

curl -Lo /usr/local/bin/k3s "$DOWNLOAD_K3S_BIN_URL"
chmod a+x /usr/local/bin/k3s

curl -Ls https://get.k3s.io | sh -s - server \
  --cluster-init \
  --token $SERVER_TOKEN \
  --node-ip $PRIVATE_IP \
  --node-external-ip $PUBLIC_IP \
  --advertise-address $PRIVATE_IP \
  --service-node-port-range 5432-9876 \
  --flannel-backend wireguard-native \
  --flannel-external-ip

Worker nodes
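The master and worker scripts share the same download logic: start from the GitHub release URL and prepend the ghproxy.com mirror when the country lookup returns CN. As a sketch, that choice can be factored into one function so both scripts use it. The name `k3s_download_url` is hypothetical and is not part of k3s or of the original scripts.

```shell
# Print the k3s binary URL for a given two-letter country code.
# $1: country code, e.g. the output of the ipip.rehi.org lookup used in this article
k3s_download_url() {
  url="https://github.com/k3s-io/k3s/releases/download/v1.28.2%2Bk3s1/k3s"
  if [ "$1" = "CN" ]; then
    # Same mirror prefix as in the article's scripts
    url="https://ghproxy.com/${url}"
  fi
  printf '%s\n' "$url"
}

# Possible usage in either script:
#   export DOWNLOAD_K3S_BIN_URL=$(k3s_download_url "$(curl -Ls http://ipip.rehi.org/country_code)")
```

Quoting the lookup result also avoids a shell error when the lookup returns an empty string.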

# Install Docker with the one-line script (skip if Docker is already installed)
curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun

# Start Docker and enable it at boot
systemctl start docker && systemctl enable docker

# Worker node setup
apt update
apt install -y wireguard

echo "net.ipv4.ip_forward = 1" >/etc/sysctl.d/ip_forward.conf
sysctl -p /etc/sysctl.d/ip_forward.conf

# Set this to the address of your master node
export SERVER_IP=43.129.195.33

# Must be the same token as on the master node
export SERVER_TOKEN=r83nui54eg8wihyiteshuo3o43gbf7u9er63o43gbf7uitujg8wihyitr6

export PUBLIC_IP=$(curl -Ls http://metadata.tencentyun.com/latest/meta-data/public-ipv4)
export PRIVATE_IP=$(curl -Ls http://metadata.tencentyun.com/latest/meta-data/local-ipv4)

export INSTALL_K3S_SKIP_DOWNLOAD=true
export DOWNLOAD_K3S_BIN_URL=https://github.com/k3s-io/k3s/releases/download/v1.28.2%2Bk3s1/k3s

if [ "$(curl -Ls http://ipip.rehi.org/country_code)" = "CN" ]; then
  DOWNLOAD_K3S_BIN_URL=https://ghproxy.com/${DOWNLOAD_K3S_BIN_URL}
fi

curl -Lo /usr/local/bin/k3s "$DOWNLOAD_K3S_BIN_URL"
chmod a+x /usr/local/bin/k3s

curl -Ls https://get.k3s.io | sh -s - agent \
  --server https://$SERVER_IP:6443 \
  --token $SERVER_TOKEN \
  --node-ip $PRIVATE_IP \
  --node-external-ip $PUBLIC_IP

Deploying Blackbox (a cluster-based deployment is also possible)

# Pull the image
docker pull rehiy/blackbox

# Start the container
docker run -d \
  --name blackbox \
  --restart always \
  --publish 9115:9115 \
  --env "NODE_NAME=guangzhou-taozi" \
  --env "NODE_OWNER=Taozi" \
  --env "NODE_REGION=Guangzhou" \
  --env "NODE_ISP=TencentCloud" \
  --env "NODE_BANNER=From Taozii-www.xiongan.host" \
  rehiy/blackbox

# Follow the logs to watch the node register
docker logs -f blackbox
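Once the container is up, the exporter can be queried by hand before Prometheus is involved. The sketch below builds the same kind of request that the Prometheus job later in this article sends (path `/probe`, module `http_2xx`, port 9115). It assumes the image serves that endpoint exactly as the job configuration implies; the helper name `blackbox_probe_url` is hypothetical.

```shell
# Print the probe URL for one target.
# $1: exporter address as host:port
# $2: URL to probe
# $3: probe module (optional, defaults to http_2xx)
blackbox_probe_url() {
  printf 'http://%s/probe?module=%s&target=%s\n' "$1" "${3:-http_2xx}" "$2"
}

# Possible usage on the node running the container:
#   curl -s "$(blackbox_probe_url 127.0.0.1:9115 https://www.example.com)"
```

The Prometheus job in this article sets `basic_auth`, so the request may need credentials (`curl -u user:password`). Targets containing `&` or `#` would need URL encoding first, which this sketch does not do.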

Deploying Grafana and Prometheus

On the master node, create a directory with any name, then create two files in it: grafpro.yaml and grafpro.sh.

grafpro.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: &name grafpro
  labels:
    app: *name
spec:
  selector:
    matchLabels:
      app: *name
  template:
    metadata:
      labels:
        app: *name
    spec:
      initContainers:
        - name: busybox
          image: busybox
          command:
            - sh
            - -c
            - |
              if [ ! -f /etc/prometheus/prometheus.yml ]; then
              cat <<EOF >/etc/prometheus/prometheus.yml
              global:
                scrape_timeout: 25s
                scrape_interval: 1m
                evaluation_interval: 1m
              scrape_configs:
                - job_name: prometheus
                  static_configs:
                    - targets:
                        - 127.0.0.1:9090
              EOF
              fi
          volumeMounts:
            - name: *name
              subPath: etc
              mountPath: /etc/prometheus
      containers:
        - name: grafana
          image: grafana/grafana
          securityContext:
            runAsUser: 0
          ports:
            - containerPort: 3000
          volumeMounts:
            - name: *name
              subPath: grafana
              mountPath: /var/lib/grafana
        - name: prometheus
          image: prom/prometheus
          securityContext:
            runAsUser: 0
          ports:
            - containerPort: 9090
          volumeMounts:
            - name: *name
              subPath: etc
              mountPath: /etc/prometheus
            - name: *name
              subPath: prometheus
              mountPath: /prometheus
      volumes:
        - name: *name
          hostPath:
            path: /srv/grafpro
            type: DirectoryOrCreate
---
kind: Service
apiVersion: v1
metadata:
  name: &name grafpro
  labels:
    app: *name
spec:
  selector:
    app: *name
  ports:
    - name: grafana
      port: 3000
      targetPort: 3000
    - name: prometheus
      port: 9090
      targetPort: 9090
---
kind: Ingress
apiVersion: networking.k8s.io/v1
metadata:
  name: &name grafpro
  annotations:
    traefik.ingress.kubernetes.io/router.entrypoints: web,websecure
spec:
  rules:
    - host: grafana.example.org
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: *name
                port:
                  name: grafana
    - host: prometheus.example.org
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: *name
                port:
                  name: prometheus
  tls:
    - secretName: default

grafpro.sh

# Warning: change the storage path and the access domains to your own values

# Storage path
export GRAFPRO_STORAGE=${GRAFPRO_STORAGE:-"/srv/grafpro"}

# Access domains
export GRAFANA_DOMAIN=${GRAFANA_DOMAIN:-"grafana.example.org"}
export PROMETHEUS_DOMAIN=${PROMETHEUS_DOMAIN:-"prometheus.example.org"}

# Substitute the parameters and deploy the service
cat grafpro.yaml \
  | sed "s#/srv/grafpro#$GRAFPRO_STORAGE#g" \
  | sed "s#grafana.example.org#$GRAFANA_DOMAIN#g" \
  | sed "s#prometheus.example.org#$PROMETHEUS_DOMAIN#g" \
  | kubectl apply -f -

Deploy
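Before applying anything, it can help to preview what the `sed` substitutions in grafpro.sh produce. The sketch below repeats the same three substitutions but stops before `kubectl`, so the result can be inspected first. The function name `render_grafpro` is hypothetical; the variable names and defaults are the ones used in grafpro.sh.

```shell
# Read a manifest on stdin and print it with the storage path and
# the two domains substituted, without applying it to the cluster.
render_grafpro() {
  sed "s#/srv/grafpro#${GRAFPRO_STORAGE:-/srv/grafpro}#g" \
    | sed "s#grafana.example.org#${GRAFANA_DOMAIN:-grafana.example.org}#g" \
    | sed "s#prometheus.example.org#${PROMETHEUS_DOMAIN:-prometheus.example.org}#g"
}

# Possible usage, printing only the lines that were meant to change:
#   render_grafpro < grafpro.yaml | grep -E 'host:|path:'
```

The rendered output can also be piped to `kubectl apply --dry-run=client -f -` to have kubectl parse it without creating any resources.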

chmod +x grafpro.sh

./grafpro.sh

Testing access

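Note: ports 9115 and 9090 must be open.

Open http://grafana.example.org in a browser. The username and password are both admin. On first login you are prompted to change the password, after which you are taken to the console.

Open http://grafana.example.org/connections/datasources/, select the first entry, set the URL to http://127.0.0.1:9090, and save.

Then select the Prometheus data source you just created and import the dashboard.

Open http://prometheus.example.org in a browser to view its information.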

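Configuring Prometheus jobs

Go back to the /srv/grafpro/etc directory on the master node.

Back up the existing yml file, then create a new prometheus.yml:

mv prometheus.yml prometheus00.yml

The contents of the new yml file are as follows (if you changed the workload name blackbox-exporter during deployment, adjust the configuration below accordingly):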

global:
  scrape_timeout: 15s
  scrape_interval: 1m
  evaluation_interval: 1m

scrape_configs:
  # prometheus
  - job_name: prometheus
    static_configs:
      - targets:
          - 127.0.0.1:9090

  # blackbox_all
  - job_name: blackbox_all
    static_configs:
      - targets:
          - blackbox-gz:9115
        labels:
          region: 'Guangzhou, Tencent Cloud'

  # http_status_gz
  - job_name: http_status_gz
    metrics_path: /probe
    params:
      module: [http_2xx]  # probe using an HTTP GET request
    static_configs:
      - targets:
          - https://www.example.com
        labels:
          project: Test 1
          desc: Test site description 1
      - targets:
          - https://www.example.org
        labels:
          project: Test 2
          desc: Test site description 2
    basic_auth:
      username: ******
      password: ******
    relabel_configs:
      - target_label: region
        replacement: 'Guangzhou, Tencent Cloud'
      - source_labels: [__address__]
        target_label: __param_target
      - source_labels: [__param_target]
        target_label: instance
      - target_label: __address__
        replacement: blackbox-gz:9115

Then restart the service as follows. First, list the pods:

kubectl get pod

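Then delete the Grafana-related pod shown in the list (replace the placeholder with its name) and wait a few minutes for it to be recreated:

kubectl delete pod <grafpro-pod-name>

Importing the Grafana dashboard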

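Download the attached JSON file and import it in Grafana's dashboard page.

After the import, monitoring is already running and the dashboard shows the various metrics.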